Vrije Universiteit Brussel

Probo, the huggable robot platform

How is Probo Controlled?

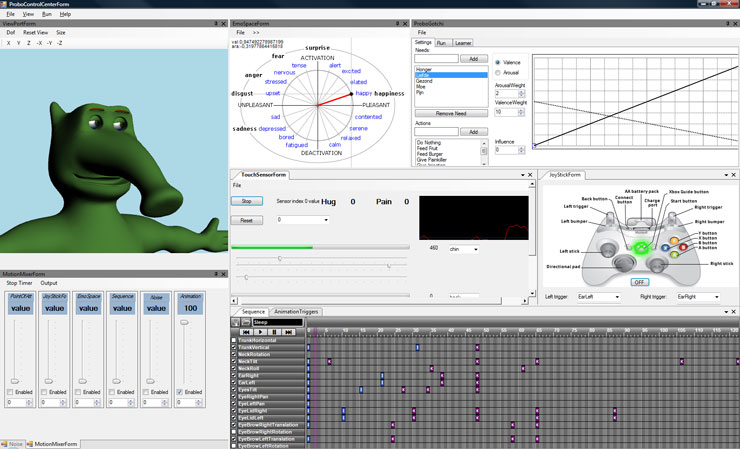

The modular software components are grouped in the Robotic User Interface (RUI), which is controlled by an operator (a caregiver or researcher). At this stage, control is shared between the operator, who evokes behaviors, emotions and scenarios, and the robot, which performs intrinsic (preprogrammed) autonomous reactions.

To translate emotions into facial expressions, emotions are parameterized. Our model uses two dimensions, valence and arousal, to construct an emotion space with a Cartesian coordinate system. Each emotion is represented as a vector whose initial point is the origin of the coordinate system and whose terminal point is given by the corresponding valence and arousal values. The direction of the vector defines the specific emotion, whereas its magnitude defines the intensity of the emotion. Each basic emotion corresponds to a certain position of the motors that produces the facial expression, as studied in the user test. Using this method, smooth and natural transitions between the different emotions are obtained.
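The mapping from an emotion vector to motor positions can be illustrated with a minimal Python sketch. It is not Probo's actual implementation: the placement of the basic emotions at fixed angles, the two-motor targets, the linear blending between neighbouring emotions, and the names `BASIC_EMOTIONS`, `MOTOR_TARGETS`, `emotion_vector` and `motor_positions` are all assumptions made for illustration.

```python
import math

# Hypothetical placement of basic emotions as angles (degrees) in the
# valence (x) / arousal (y) plane. Illustrative values, not Probo's.
BASIC_EMOTIONS = {"happy": 0.0, "surprised": 90.0, "sad": 180.0, "tired": 270.0}

# Hypothetical motor targets per basic emotion (two motors, range -1..1).
# An all-zero pose is assumed to be the neutral face.
MOTOR_TARGETS = {
    "happy": [1.0, 0.5],
    "surprised": [0.0, 1.0],
    "sad": [-1.0, -0.5],
    "tired": [0.0, -1.0],
}


def emotion_vector(valence, arousal):
    """Return (direction in degrees within [0, 360), intensity clamped to [0, 1])."""
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    intensity = min(1.0, math.hypot(valence, arousal))
    return angle, intensity


def motor_positions(valence, arousal):
    """Blend the two neighbouring basic emotions, scaled by intensity."""
    angle, intensity = emotion_vector(valence, arousal)
    ordered = sorted(BASIC_EMOTIONS.items(), key=lambda item: item[1])
    for i, (name, start) in enumerate(ordered):
        next_name, end = ordered[(i + 1) % len(ordered)]
        span = (end - start) % 360.0 or 360.0   # angular width of this sector
        offset = (angle - start) % 360.0        # position inside the sector
        if offset < span:
            t = offset / span                   # 0 at `name`, 1 at `next_name`
            a, b = MOTOR_TARGETS[name], MOTOR_TARGETS[next_name]
            return [intensity * ((1 - t) * x + t * y) for x, y in zip(a, b)]
    raise ValueError("angle not covered by any sector")


if __name__ == "__main__":
    print(motor_positions(1.0, 0.0))   # full-intensity "happy" pose
    print(motor_positions(1.0, 1.0))   # halfway between "happy" and "surprised"
    print(motor_positions(0.0, 0.0))   # zero intensity, neutral pose
```

Because the direction selects which expressions are blended and the magnitude scales the result toward the neutral pose, moving the vector continuously through the emotion space changes the motor positions continuously, which is one way to obtain the smooth transitions described above.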


©2009 • Vrije Universiteit Brussel • Dept. MECH • Pleinlaan 2 • 1050 Elsene • Tel.: +32-2-629.31.81 • Fax: +32-2-629.28.65 • webmaster